AIGC Trends in 2023

Part 3 of "New trend of technology in 2023 that you must pay attention to"

In 2022, the AI painting "Space Opera House", generated with Midjourney, brought AIGC into the mainstream. Around the same time, Stability.ai and Jasper.ai completed large financing rounds in the current market environment, at valuations of US$1 billion and US$1.5 billion respectively. Together, these two events acted as a catalyst that ignited the market's enthusiasm for AIGC.

The era of large models initiated by OpenAI has naturally raised the research and development cost of foundation models, which limits the number of players who can take part. Beyond financial cost, the first movers' advantage in accumulated data further consolidates the leading position of their foundation models. These models may eventually hold the position that iOS and Android hold in mobile: the companies that form the platform provide infrastructure services to application-layer companies through APIs, just as Jasper, Copy.ai, and Notion all use GPT-3 today.

Image generation is at the stage where a large number of application scenarios are being explored, while video and 3D content generation are still waiting for a foundation model that brings progress comparable to DALL-E. Meanwhile, in text generation, GPT-4 is expected to be released next year. Judging from the results of ChatGPT, which is known as "GPT-3.5", it is well worth looking forward to.

Trend: AIGC applications built on platform-level foundation models are about to take off

Compared with autonomous driving, AIGC faces relatively few regulatory issues; compared with VR/AR, AIGC does not require additional hardware.

Practitioners of knowledge-based and creative jobs are the most directly affected by AIGC, mainly including marketing personnel, sales personnel, writers, image workers, video workers, etc. AIGC’s generation forms can include material-based partial generation, instruction-based fully autonomous generation, and generation optimization. In terms of content, in addition to common explicit content such as text, images, audio, and video, it also includes non-explicit content such as behavioral logic, training data, and algorithm strategies. Ideally, almost all occupations can be improved by AI.

The application of AIGC in the direction of text generation: can be a marketing expert, creator and programmer

1) Marketing copywriting application: high degree of commercialization and fiercest competition

At present, the most commercialized and most competitive scenario in text generation is marketing copywriting. Leading companies include Jasper, Copy.ai, and Copysmith. Most of these companies build on GPT-3 and add features such as creative templates and SEO enhancements, giving businesses and individuals the ability to produce promotional copy quickly. The charging model is generally a subscription based on the number of words used, at a price of about US$1 per thousand words.

Take generating a marketing email with Copy.ai as an example. Users only need to enter the type of copy they want, the key points to cover, and the appropriate tone, and the AI quickly generates several copy options. After choosing one, the user can use the editor to rewrite or modify parts of it, and a complete marketing email is ready. Under the same model, AI-generated text is gradually covering not only marketing emails but also marketing-related advertisements, blogs, and social media posts.

From a product point of view, the entry barrier for marketing copywriting is relatively low. The main barriers for such companies lie in the accumulation of users and data, so first movers have certain advantages. On the one hand, user acquisition costs are relatively low in the early stages of an industry. On the other hand, because users revise the generated drafts to fit their own needs, the large volume of user-adjusted output becomes an important data asset for optimizing the company's model. The flywheel effect we often mention is very visible in AI model optimization: once they have high-quality training data, leading companies can fine-tune their models more efficiently and build a greater competitive advantage.

This is why a large company like Notion, whose product is deeply tied to text productivity, is expected to enter the field soon as well. Such companies already have a large user base, and their products are already embedded in users' workflows, so they can move very quickly by relying on the same foundation model to do the same thing.

It is hard for startups in this field to compete with the current leaders, Jasper and Copy.ai, or with the large companies that are about to enter. There may still be opportunities for people with special expertise in a particular application scenario to use AI to scale their domain knowledge and turn it into software. For example, one of Regie's founders is a senior expert in market strategy and revenue growth, so Regie started with content generation for sales teams.

Draft (a company incubated by YC) takes a different approach from the "AI generation + user adjustment" model. What Draft delivers is AI-generated content that has been revised by professional writers, that is, an "AI generation + professional adjustment" model. Draft's positioning is to help a company produce the final draft directly, so many of its customers are very early-stage companies. The charging model is a per-project fee based on word count, at US$200 per thousand words.

2) Generation of creative long texts: small commercial scenarios and high technical difficulty

Marketing text is essentially creative content produced within a template framework, and its length is usually limited. Applications for creative long texts in the true sense are not yet mature. For articles of more than about 1,000 words, it is difficult for AI to maintain contextual relevance and logic. Solving this problem requires targeted model training or further iteration of the underlying model. Because the commercial scenarios for creative long texts are relatively small, there are not many startups in this field.

The current solution for long texts is to split them into short texts and generate each one separately. For a long blog post, for example, the AI first generates an outline; after the user revises the outline, the content of each part is generated according to its theme. Another approach is to build the world of a novel or game through human-computer interaction. AI Dungeon from Latitude, for example, adds a plot setting on top of human-machine dialogue, and players create a story together with the AI through three commands: Say, Story, and Do.
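The outline-then-sections workflow described above can be sketched in a few lines. This is only an illustrative sketch: `fake_generate` is a hypothetical stand-in for a call to a text-generation model, and the prompts and function names are assumptions, not any vendor's actual API.

```python
from typing import Callable, List


def fake_generate(prompt: str) -> str:
    """Hypothetical stand-in for a text-generation model call."""
    return f"[generated text for: {prompt}]"


def draft_outline(topic: str, n_sections: int,
                  generate: Callable[[str], str]) -> List[str]:
    # Step 1: ask the model for one heading per section.
    return [generate(f"Heading {i + 1} for an article about {topic}")
            for i in range(n_sections)]


def write_long_text(topic: str, outline: List[str],
                    generate: Callable[[str], str]) -> str:
    # Step 3: generate each section separately from its (possibly edited) heading.
    sections = []
    for heading in outline:
        body = generate(f"Write the section '{heading}' of an article about {topic}")
        sections.append(f"{heading}\n{body}")
    return "\n\n".join(sections)


outline = draft_outline("AIGC trends", 3, fake_generate)
# Step 2: the user revises the outline before any body text is generated.
outline[1] = "How marketing teams use AIGC"
article = write_long_text("AIGC trends", outline, fake_generate)
print(article)
```

Because each section is generated from its own short prompt, no single model call has to stay coherent over thousands of words; the trade-off is that consistency across sections rests on the outline.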

3) Code generation: high penetration rate, many risks and vulnerabilities

Beyond ordinary text, code generation is another important scenario for AI text generation. Tools like GitHub Copilot can bring large efficiency gains to programmers, and the penetration rate is already very high: in many programming languages, nearly 30% of newly written code is completed with the help of AI.

AI code generation models are mainly large language models that are further trained on code data, such as code hosted on GitHub. Major players in code generation therefore include Microsoft (GitHub Copilot), Amazon (CodeWhisperer), Google, Salesforce (CodeGen), and Tabnine. The main scenarios are single-line and multi-line code generation.

It is relatively difficult for start-ups to compete with the large companies in this field. The Internet giants have a large amount of training data and are themselves the largest users, so they can optimize their models well through internal use. One direction start-ups are currently pursuing is adding AIGC on top of no-code and low-code tools. For example, Debuild combines AI with low-code website generation.

The current rules and laws related to code generation have not kept up with the speed of technological progress, and there are many regulatory gaps, which means that AI code generation is accompanied by many risks of code copyright infringement and code leakage.

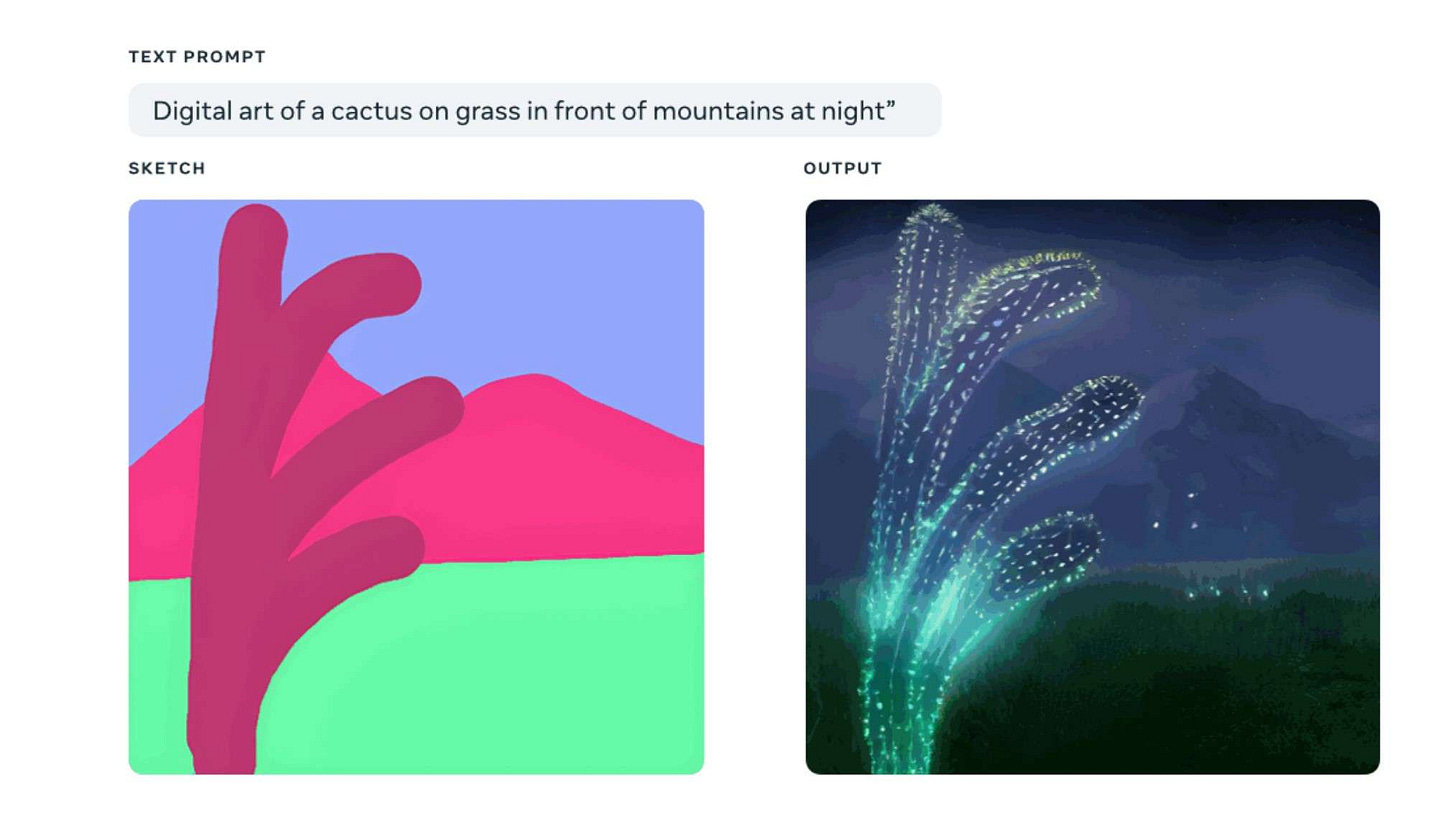

The application of AIGC in the direction of image generation: for illustrators and AI tool enthusiasts

AI-generated images have been popular in AI circles since DALL-E 2, but they only became truly popular once open source versions appeared. Disco Diffusion (the predecessor of Midjourney), the first to reach the public, was mainly used by illustrators and AI tool enthusiasts. Then came Stability AI, which set out to build an open source, co-created model and finally brought AI-generated images to the general public. Its model, Stable Diffusion, can be understood as an open source counterpart to DALL-E 2. Users can generate pictures directly in DreamStudio, which is deployed on the official website, or build their own customized models on top of the open source model. It is fair to say that Stability AI has thoroughly lowered the threshold for AI-generated images. Stable Diffusion has accumulated more than 10 million daily active users across channels, and the consumer-oriented DreamStudio has gained more than 1.5 million users. Its rapid development is also visible in how quickly its GitHub star count has grown.

1) C-end scenario: usage-based subscription model

At present, the business model of AI painting on the C side is relatively simple: it is generally a subscription that charges by usage. For example, DreamStudio from Stability currently provides 200 free generations, and after the trial every 100 generations cost 1 pound. The CEO of Stability has said that the future business model will be similar to Red Hat's, that is, the open source version is free and the commercial version generates profit. Midjourney uses a subscription system: new users can generate 25 images for free and are then charged according to GPU time. The Basic Plan, at $10 per month, includes 200 minutes of GPU time (about 5 cents per generation); the Standard Plan, at $30 per month, includes 900 minutes of GPU time (about 3 cents per generation).
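The per-generation figures quoted for Midjourney follow from dividing the monthly price by the included GPU minutes. The short calculation below is a sketch under one assumption that the text implies but does not state: a single generation uses roughly one minute of GPU time. The function name is illustrative.

```python
def cents_per_gpu_minute(monthly_price_usd: float, gpu_minutes: int) -> float:
    """Price of one GPU minute in US cents for a given plan."""
    return monthly_price_usd * 100 / gpu_minutes


# Plan figures as quoted in the article.
basic = cents_per_gpu_minute(10, 200)
standard = cents_per_gpu_minute(30, 900)

# Assumption: one generation takes about one GPU minute,
# so cents per minute approximates cents per generation.
print(f"Basic: {basic:.2f} cents, Standard: {standard:.2f} cents")
```

Under that assumption, the Basic Plan works out to 5 cents and the Standard Plan to about 3.3 cents per generation, consistent with the rounded figures in the text.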

For ordinary C-end users, commercial application scenarios are limited and the willingness to pay is low. According to one report, 60% of users have never paid for AI painting, and users who are simply trying something new account for a large share. Independent artists and designers who want to use it commercially are limited by the source material and the technology: meeting a customer's needs still takes a great deal of post-processing to reach a finished product. For example, after the original "Space Opera House" was generated by AI, the designer made thousands of revisions, and the finished work took nearly 80 hours. Therefore, exploring more B-side commercialization paths on top of the foundation model may be the direction of future development.

2) B-end scenario: a 100-billion-scale animation market, interior design, and 3D content applications

The primary focus of the B-end market is comics and animation. The global animation market is about 391 billion US dollars in 2022. The addressable market is huge, and AIGC can ease the problem of animation production costs to some extent. One minute of 2D animation costs at least US$10,000 to produce and corresponds to 72 pictures, so each picture costs at least US$130. At DALL-E 2's pricing, generating 8 images costs US$0.26 (assuming that 8 iterations are needed to get the required image). By comparison, AI reduces the cost by a factor of several hundred. In addition, the speed of producing drafts, which is AIGC's most significant advantage, can greatly accelerate concept testing and production in the animation industry.
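The "hundreds of times" claim can be checked with the figures quoted in the paragraph. The calculation below is a rough sketch: it takes the article's numbers at face value and assumes, as the text does, that 8 generated images are needed to obtain one usable picture.

```python
# Figures quoted in the article for traditional 2D animation.
cost_per_minute_usd = 10_000
frames_per_minute = 72
cost_per_frame_usd = cost_per_minute_usd / frames_per_minute

# Article's DALL-E 2 figure: 8 generated images cost US$0.26.
# Assumption from the text: 8 iterations yield one usable picture.
ai_cost_per_frame_usd = 0.26

ratio = cost_per_frame_usd / ai_cost_per_frame_usd
print(f"Traditional: ${cost_per_frame_usd:.2f} per picture")
print(f"AI: ${ai_cost_per_frame_usd:.2f} per picture, about {ratio:.0f}x cheaper")
```

The result, roughly US$139 per picture against US$0.26, gives a ratio of a little over 500. It ignores the human labor still needed to select and retouch AI output, so it is best read as an upper bound on the saving.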

Many studios have already embraced AI. For example, Toei Animation, the production company behind One Piece and Dragon Ball, cooperated with PFN (Preferred Networks) in 2021 to test AI animation production. Given AIGC's advantages in generation speed and cost, and with continued progress in the underlying models and optimization of vertical models, B-side application scenarios with high production costs and long production cycles have the potential for commercialization.

The same logic applies to other areas, such as the generation of game assets. Scenario is a company that creates and designs in-game 3D assets. Users can capture real-life 3D objects on video and then generate corresponding models in the game. Scenario holds a large amount of in-game 3D asset data. Building on Stable Diffusion, Scenario has launched an AIGC tool for game developers and game designers that focuses on generating various game assets.

Another example is interior design. Interior AI is trained on pictures of interior design on top of Stable Diffusion. Users only need to upload photos of an existing room, and the model generates new designs from the existing one in the style they select, presenting the results simply and quickly.

The market size of 3D content should not be underestimated either. According to statistics from Business Wire, the global 3D animation market exceeds US$20 billion in 2022. Compared with 2D content, 3D content costs more and takes longer to produce. With the development of diffusion models and NeRF models, the application of AI to 3D content generation has been advancing rapidly.

3D textures and assets are essential elements in making games, movies, and CGI. Creating textures takes a lot of time and money: photographers have to travel to remote locations to capture real-world objects up close and photograph them from different angles in order to build a comprehensive texture library. Recently, Runway (an American provider of AI photo and video editing software) launched a diffusion-based model for generating 3D materials, which can produce 3D textures quickly. Although the quality is still far behind Quixel's material library, it can be expected to improve as the model improves and data accumulates.

3D modeling is a market of nearly 13 billion, and 3D scene generation can be applied to games, VR/AR, and the metaverse. For example, Space Data has trained a 3D city generation model on satellite data, based on Stable Diffusion. Given a set of satellite photos of Manhattan as input, the model generated a comic-style 3D city model in a few minutes.

At the same time, with the development of NeRF models, 3D modeling is advancing rapidly in interior design. From only a few photos of an interior, a NeRF model can quickly generate a 3D model of the room, and the overall style can be switched through text instructions. As this technology develops further, the $4.1 billion interior design software market may be reshuffled.

The application of AIGC in the direction of video generation: text-to-video conversion, video editing, personalized marketing

In the era of video, the medium has shown its strength in areas such as attracting traffic, spreading content, and advertising. As the underlying technology continues to expand its capabilities, AIGC applications have naturally extended from text and images to video. As with 3D content generation, video generation has a higher technical threshold and a larger market, and has become a focus for entrepreneurs and investment institutions.

1) Text-to-video conversion: clarity and fluency continue to improve

True text-to-video generation was first published by Google in April 2022, but the results at that time were not very good; the clarity and fluency of the videos were relatively low. It was not until Meta's Make-A-Video and Google's Imagen Video and Phenaki appeared around September that the "DALL-E moment" for video generation seemed close at hand. Google has tried two directions in video generation: Imagen Video is a diffusion-based model, and Phenaki is a non-diffusion-based model. Each has its own strengths and weaknesses. At a recent Google AI conference, Google showed a video generated by combining Imagen Video and Phenaki, and the quality of the roughly 50-second video was greatly improved.

2) Video editing tools: become an expert in video optimization and automatic editing

The application of AIGC in video editing is mainly divided into two categories:

The first category is the editing of video attributes, such as restoring video quality, removing specific subjects from the frame, automatically adding specific content, and automatic beautification. There are two leading companies: Runway approaches video editing from the image editing side, while Descript approaches it from the audio editing side. The entry points differ, but the goal is the same: to improve the efficiency of content creators so that they can focus more on coming up with ideas.

The second category is automatic video editing. Unlike the generation described above, automatic editing works by finding and assembling existing material that meets the given conditions; to some extent, it is a transitional solution on the way to automatic generation. Baidu's video account has a similar function, which automatically searches for relevant content based on the uploaded copy and then produces a video. However, because the available video material is limited, the current quality is not very high.

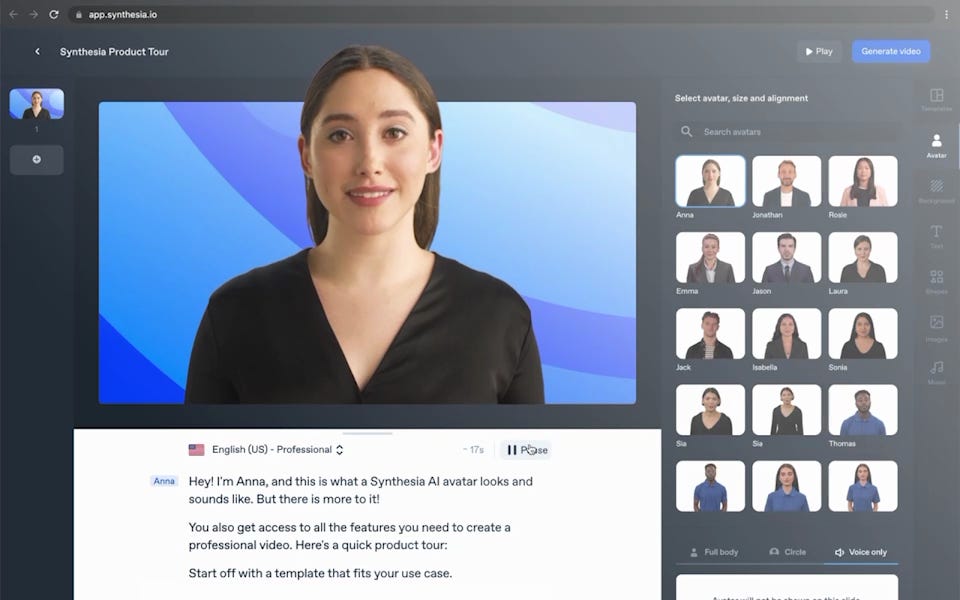

3) Personalized marketing video generation: customized virtual avatars

AI-generated personalized marketing video is a relatively mature application. It is usually presented as a person speaking directly to the camera. The generation modes generally fall into two categories. In the first, the system generates the audio and the character's matching mouth shapes, and then produces videos in batches by replacing keywords such as data and names. In the second, the user records a video, the AI creates a customized virtual person from the user's data, and videos are then produced in batches by replacing keywords.
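The keyword-replacement step in both modes is essentially template filling: one script with placeholders is expanded into one script per recipient, and each result is then passed to the audio and video synthesis stage. The sketch below shows only that text step using Python's standard `string.Template`; the script wording and recipient data are made-up examples, and the rendering stage is out of scope.

```python
from string import Template

# One script with placeholders for the keywords to be replaced.
script = Template("Hi $name, your team saved $hours hours last month.")

# Hypothetical per-recipient data; in practice this would come from a CRM export.
recipients = [
    {"name": "Ana", "hours": "12"},
    {"name": "Ben", "hours": "7"},
]

# One personalized script per recipient, ready for speech and video synthesis.
scripts = [script.substitute(r) for r in recipients]
for line in scripts:
    print(line)
```

`substitute` raises `KeyError` if a recipient record lacks one of the placeholders, which is a useful guard before an expensive rendering job starts.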

For example, Synthesia has produced more than 1 million videos for customers since it was founded. The best-known case is the campaign it produced for Lay's potato chips with Messi's avatar as the main character: by choosing among different message options, the user receives a personalized invitation from Messi to watch a game. This type of video is not AIGC in the true sense, because the video is not generated directly by a text-to-video cross-modal model.

The application of AIGC in the direction of work scenarios: office assistants, improving office efficiency

Decision Transformer (using the network to output actions directly, that is, to make decisions directly) is another Transformer-based direction of development. This kind of model lets AI learn how humans work in a given setting so that it can generate appropriate decisions. Studies project that the office process automation market will grow to 19.6 billion US dollars by 2026. For example, Adept.ai's ACT-1 model can operate a range of everyday office software such as Excel, Salesforce, and Figma through natural language.

ACT-1 (Action Transformer, a general-purpose AI assistant) is a browser plug-in that breaks down and executes tasks from text instructions entered by the user. The tasks it can perform include completing search tasks in a browser, carrying out more than 10 consecutive operations in office software, and performing tasks that require several pieces of software.

Looking ahead, Adept believes that AI assistants are not only tools for performing tasks but also a new way of interacting with computers. Most human-computer interaction will take place in natural language rather than through a GUI, which lets beginners use various productivity tools quickly and without training. Working together with AI, people will become far more productive.

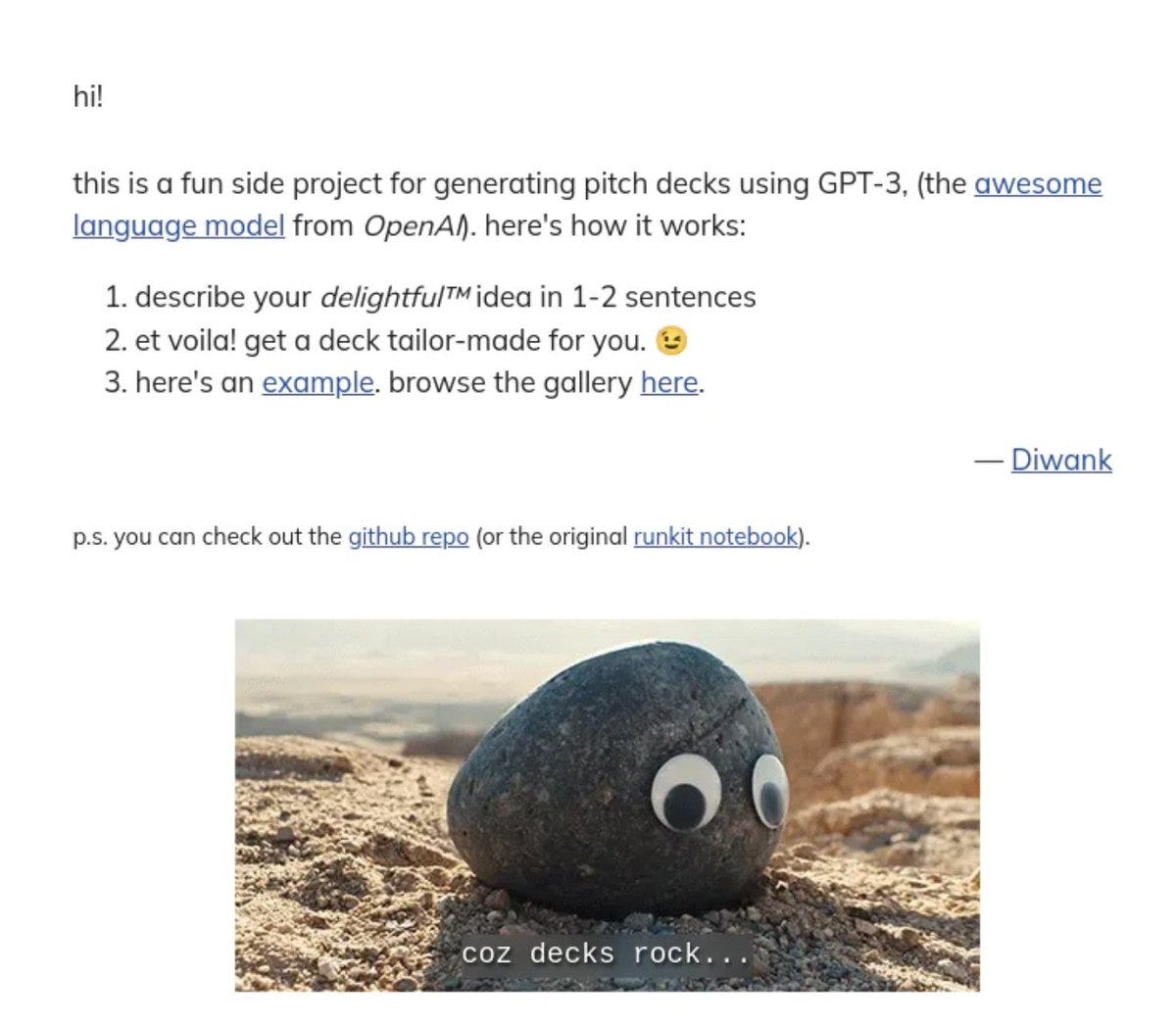

Besides Adept, many start-ups provide productivity tools for vertical fields. For example, DeckRocks can generate a business plan from a language description. When the user enters a text description, GPT-3 generates the main content of each page of the business plan; DALL-E (an artificial intelligence program that generates images from text descriptions) generates the company's logo and the required illustrations; and the Product Hunt API is used to search for detailed information on similar products. Promptloop is an Excel-based productivity tool that provides functions such as text classification, language translation, data cleaning, and data analysis inside Excel, used in the same way as SUM and VLOOKUP.